The NIH falls for FC: How did this happen and is it reversible?

Some six weeks ago, the National Institute on Deafness and Other Communication Disorders (NIDCD) sponsored a conference entitled “Minimally Verbal/Non-Speaking Individuals With Autism: Research Directions for Interventions to Promote Language and Communication.” The NIDCD is part of the U.S. National Institutes of Health, and so is funded by Congress. All of us citizens and taxpayers, therefore, should be concerned by the fact that this event served, albeit only in part and mostly indirectly, to promote facilitated communication. It did so primarily by showcasing two “non-speaking” autistic individuals who type out grammatically well-formed, syntactically sophisticated, vocabulary-rich messages on keyboards: messages that show no evidence of the language learning impairments associated with non-speaking autism.

Once the conference’s program became available, several autism experts expressed concern that an FC user was listed as a panelist, that the event featured “S2C proponent Vikram Jaswal presenting a flawed study of S2C users”, that the NIH was “giving credence to FC”, and that the only other representative of non-speaking autism was someone who had told the world, years ago, “I can talk. I can even have a conversation with you.”

(S2C, or Spelling to Communicate, is a variant of facilitated communication very similar to another variant, the Rapid Prompting Method).

The public airing of these objections apparently hit a nerve. Judith Cooper, the head of the NIDCD and one of the three conference organizers, opened the event by admonishing presenters and participants to be “respectful” and not return to “any past debates.” She added:

I am personally appalled and somewhat saddened about some of what is appearing in social media related to our media and this sort of attack behavior will not be tolerated here.

Reminders to be respectful and not get into “past debates” were repeated by Dr. Cooper and others over the course of the two-day conference.

Videos of the conference have been archived and you can watch them here and here. In what follows, I’ll highlight the ways in which the event promoted facilitated communication—primarily a variant of FC known as the Rapid Prompting Method (RPM)—and then say a bit about the aftermath and how far some of this might go.

The first session, the “Panel of Stakeholder Perspectives”, opened with a young man who has a long history of communicating via facilitated communication and the Rapid Prompting Method (RPM): a history that Dr. Cooper and others in charge could easily have looked up. This person, who (as it later became clear) had someone sitting next to him, pushed a button on his computer that read out a pre-recorded message.

The next presenter was Matthew Belmonte, the sibling of an RPM user and a long-time supporter of RPM. Belmonte acknowledged that sometimes RPM “clearly” lends itself to facilitator influence, but also stated that it sometimes enables authentic communication. (If you peruse this website’s Research pages, you’ll see that there’s no evidence for the second claim). The question, Belmonte stated, isn’t whether RPM is valid, but “under what circumstances.” He then cited moments in which he and his brother shared memories: during such moments, he assured us, his brother’s messages were “clearly valid.” What’s needed, therefore, is to “reconcile the very many positive case reports” of successful RPM with what Belmonte characterized as the “binary tests of message passing” that may not be sensitive to “subtle issues.” (He didn’t elaborate on what those are). The answer, Belmonte suggested, is what would amount to indirect validation studies. As an example, he cited Vikram Jaswal’s highly flawed eye-tracking study.

The next few presenters endorsed the importance of being “respectful.” They alluded, respectfully, to the first presenter, raising no concerns about whether the recorded message was authentically his.

The next session, “Novel intervention approaches for minimally verbal/non-speaking individuals”, was much more evidence-based. It included, among others, several fantastic presentations on augmentative and alternative communication and on treatments for speech apraxia by Janice Light, Karen Chenausky, and Howard Shane. Dr. Shane later resigned from the NIDCD group in protest, not wanting to be associated with the event’s platforming of FC pseudoscience.

In the Q & A that followed, Belmonte reappeared, interjecting the FC-friendly assertion that there’s been too much emphasis on autism as a social communication disorder and not enough attention paid to sensorimotor problems. (FC depends on a redefinition of autism as some kind of motor or sensorimotor disorder; there’s more about that elsewhere on this website).

Day 2 of the NIDCD event returned to evidence-informed presentations on research design and outcome measures, followed by a Q & A by Stephen Camarata and Amy Lutz. Dr. Lutz (who also spoke in the Q & A at the end of the discussion) pointed out that the presentations had overlooked a key factor in severe autism: intellectual disability. She noted that the insistence on an intellectually intact mind is one of the factors behind the resurgence of facilitated communication. She also emphasized the importance of ensuring that interventions are evidence-based and of empirical testing to ensure that individuals are authoring their own messages. Dr. Lutz deftly raised these concerns in ways that didn’t contravene the warnings about “respect” and “past debates.” (The debates about FC, and the evidence against it, date back to the 1990s).

Following Dr. Lutz’s caveats, however, was a final session that, effectively, completely defied any concerns about intellectual disability and evidence. Its agenda, in part, appeared to be to drive home the notion that non-speaking autistic individuals have fully intact minds: in particular, intact vocabularies, intact syntax skills, and intact literacy skills.

In this final act, Vikram Jaswal, an RPM promoter and the author of that highly flawed eye-tracking study, moderated a discussion with the two non-speakers mentioned above. One of them—the young man who spoke in the opening panel—is an actual non-speaker who has a long history of being facilitated through RPM. I’ll refer to him as an “RPM user”, as there is no solid evidence that he’s “graduated” from RPM and can type spontaneous answers to questions with his facilitator outside of auditory or visual cueing range. The other is an individual who is non-speaking only in the sense that she mostly communicates by typing. As she herself has attested, she is not a non-speaker as the term is generally understood: she’s able to engage in spoken conversation. I’ll refer to her, accordingly, as a “non-speaker”, scare quotes included. It’s worth adding that all three individuals are associated with the pro-FC organization Communication First: Jaswal, through his wife (the Executive and Legal Director); and the “non-speaker” and the RPM user, through their respective positions as Chair of the Board of Directors and as a member of the Advisory Council.

Either the conference organizers (Judith Cooper, Connie Kasari, and Helen Tager-Flusberg) were aware of none of this, or, as they suggested later, what they saw with their own eyes when observing the RPM user and the “non-speaker” in advance of the conference allayed any concerns, or both. Either way, watching the conference organizers watch these individuals as they typed, I cringed at finding them so impressed, and so genuinely moved, by what they apparently considered to be authentic messages authored by authentic non-speakers providing an authentic window into the otherwise elusive perspectives of non-speaking individuals with autism.

A still image from the publicly available video recording of the NIDCD conference.

As this session proceeded, Jaswal directed questions to the “non-speaker” and the RPM user, and they responded in turn. Generally, the RPM user either

(1) tapped out a message (sometimes typing letters and sometimes selecting from a bank of pre-selected or predicted words), which his device read out word by word, or

(2) pushed a button that outputted a pre-recorded message.

But if you watch the video carefully a few times, starting at the 2-hour mark, you’ll see something odd happen that suggests a mix-up between tapped-out and pre-recorded messages.

First, the RPM user taps a half dozen times, apparently selecting words, and then hits a button that plays out this message: “I echo what [the “non-speaker”] is saying. I would add attitudes to listening are important.” (The computerized speech is hard to decipher in places, so this and later transcriptions represent my best guesses). As soon as this message starts playing, the hand of another person—presumably a person who’s been sitting next to him this whole time—suddenly enters the screen and pushes the RPM user’s hand away, quite abruptly.

A still image from the publicly available video recording of the NIDCD conference.

That hand then starts tapping on various buttons around the screen, and the recorded message stops playing. Next we hear a voice say, “Sorry, we’re having a volume issue.” (There was actually no indication of any problem with volume). The hand hits a few more buttons and then the voice says “Go ahead, try now” and the RPM user starts tapping again, about a dozen times, mostly at the same spot on the screen. The voice makes a “sk” sound (perhaps the beginning of the word “scroll”) and then, after a few more keystrokes, the hand reappears and hits a few more buttons, causing the screen to scroll down a bit. The voice then says “Go ahead, start at the top.” At that point, Dr. Kasari, shaking her head sympathetically, says “Technology… I mean it’s an issue.” The RPM user taps five more times and then the recorded message replays: “I echo what [the “non-speaker”] is saying. I would add attitudes to listening are important.” But this time it keeps going:

I only agreed to participate after I got to talk at length with the coordinators. And establish a relationship helped. Without these accommodations, we are at a later disadvantage. We have limited exposure to the meeting format and we have not much time to digest the science without the materials ahead. It takes longer to type, so it is highly unlikely we will be able to time with the conversation. It creates stress, which can lead to dysregulation, which can reinforce the idea that we are not equipped to participate. If we have the opportunity to plan well, we would be fine.

Even with word prediction and/or a word bank, it would take well over five taps to generate this many words, and that raises a number of questions about just what was going on both on and off screen.

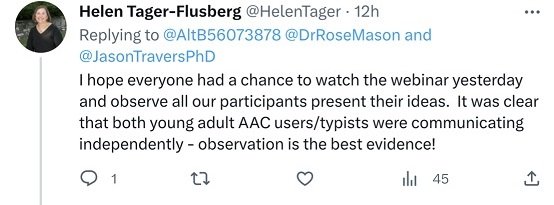

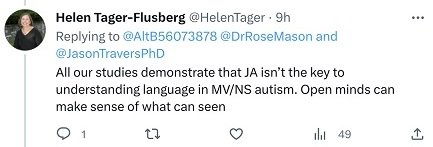

In their concluding remarks, the three organizers—Judith Cooper, Connie Kasari, and Helen Tager-Flusberg—repeatedly praised the two “non-speaking” presenters for their courageousness and stamina. Dr. Kasari also made it clear that she had spent significant time with each of them (she didn’t specify whether this was through Zoom frames or in person) and could vouch for their authenticity, their ability to communicate spontaneously, and their senses of humor. Later, on Twitter, Tager-Flusberg addressed the conference’s critics:

I found it surprising that someone with two degrees in psychology would fall for the kind of psychological fallacy normally covered in introductory psychology courses (naïve realism, AKA seeing is believing).

I also found it surprising that someone who is well aware of the importance of joint attention in language learning, and of its rarity in severe autism, wouldn’t be more skeptical of the sophisticated language typed out by the RPM user. Instead, she claims that her recent papers find that joint attention isn’t necessary for language acquisition in minimally verbal individuals.

Interested readers are invited to see if they can locate any such papers.

On several occasions during the conference, both Dr. Kasari, an expert in interventions in young children with autism, and Dr. Tager-Flusberg, an expert in language acquisition in autism, remarked on the amazing heterogeneity of non-speaking autism. Their implication, presumably, was that only a small fraction of non-speakers with autism are capable of the linguistic output produced by the two (“)non-speaking(”) participants. If most autistic non-speakers had somehow mastered this much language, that would be much more academically problematic: it would overturn decades of research into autism and language acquisition, including much of their own research.

So I’m curious what will happen if Drs. Tager-Flusberg and Kasari learn that practitioners of S2C, a close cousin of RPM, are claiming a 100% success rate in extracting complex language from severely autistic kids, and 100% success, as well, with individuals with Down syndrome. Consider, for example, S2C practitioner Dawnmarie Gaivin, President of the Board of the Spellers Freedom Foundation. Ms. Gaivin stars both in J.B. Handley’s S2C miracle cure memoir, Underestimated, and in the movie Spellers, based on that memoir. Given all this exposure, perhaps what she says in a recent interview (at just past the 16-minute mark) will eventually reach the ears of Drs. Tager-Flusberg and Kasari:

I haven’t met a single person nor has my mentor, previous mentors or any of my colleagues met a single person who actually meets a cognitive disability diagnosis once given this reliable method of communication... I even have multiple students in my practice with Downs Syndrome my practice and none of them have an intellectual disability either. So far it’s turning out to be Apraxia with them as well.

But here’s the question (and at this point I really don’t know the answer):

Just how extraordinary does a claim need to be for “observation is the best evidence” to become as transparently ridiculous as it is to people watching a magic show?